
Overview

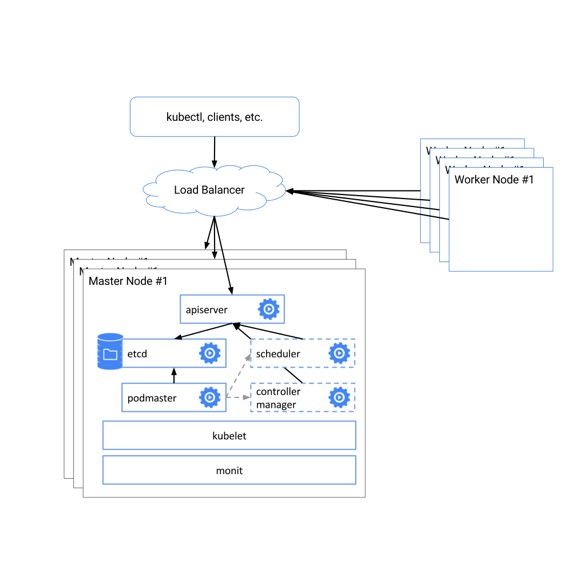

Build a design that runs three Kubernetes masters with a load balancer (LB) in front of them.

Everything except etcd is installed with kubeadm.

Environment

Versions used (per the official documentation):

| Software | Version |
|---|---|
| Kubernetes | 1.10.2 |
| Docker | 18.03.1-ce |
| Etcd | 3.2.19 |
| Master/Node OS | CentOS 7.4 |
| Calico | v3.1.1 |

| Node name | IP | Role |
|---|---|---|
| k8s-lb | 10.16.181.200 | Load balancer |
| k8s-master1 | 10.16.181.201 | master |
| k8s-master2 | 10.16.181.202 | master |
| k8s-master3 | 10.16.181.203 | master |
| k8s-node1 | 10.16.181.206 | worker |
| k8s-node2 | 10.16.181.207 | worker |

Creating the certificates

Following the Kubernetes certificates documentation (see References), create the TLS certificates needed to install etcd.

In this example the certificates are created on k8s-master1.

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o cfssl

chmod +x cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o cfssljson

chmod +x cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o cfssl-certinfo

chmod +x cfssl-certinfo

mkdir cert

cd cert

Create ca-csr.json:

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names":[{

"C": "<country>",

"ST": "<state>",

"L": "<city>",

"O": "<organization>",

"OU": "<organization unit>"

}]

}

Generate ca.csr, ca.pem, and ca-key.pem from this configuration with the following command:

../cfssl gencert -initca ca-csr.json | ../cfssljson -bare ca

Next, use this CA to create the certificates for etcd.

Start with the configuration files.

ca-config.json

{

"signing": {

"default": {

"expiry": "168h"

},

"profiles": {

"server": {

"expiry": "43800h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "43800h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "43800h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

etcd.json

{

"CN": "etcd",

"hosts": [

"k8s-master1", "10.16.181.201",

"k8s-master2", "10.16.181.202",

"k8s-master3", "10.16.181.203"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "<country>",

"ST": "<state>",

"L": "<city>",

"O": "<organization>",

"OU": "<organization unit>"

}

]

}

etcd-client.json

{

"CN": "etcd-client",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "<country>",

"ST": "<state>",

"L": "<city>",

"O": "<organization>",

"OU": "<organization unit>"

}

]

}

etcd-peer.json

{

"CN": "etcd",

"hosts": [

"k8s-master1", "10.16.181.201",

"k8s-master2", "10.16.181.202",

"k8s-master3", "10.16.181.203"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "<country>",

"ST": "<state>",

"L": "<city>",

"O": "<organization>",

"OU": "<organization unit>"

}

]

}

Generate the certificates from the configuration files.

../cfssl gencert -ca=ca.pem -ca-key=ca-key.pem --config=ca-config.json -profile=server etcd.json | ../cfssljson -bare etcd

../cfssl gencert -ca=ca.pem -ca-key=ca-key.pem --config=ca-config.json -profile=peer etcd-peer.json | ../cfssljson -bare etcd-peer

../cfssl gencert -ca=ca.pem -ca-key=ca-key.pem --config=ca-config.json -profile=client etcd-client.json | ../cfssljson -bare etcd-client

This creates the following certificates in the cert directory:

ca-key.pem

ca.pem

etcd-client-key.pem

etcd-client.pem

etcd-key.pem

etcd-peer-key.pem

etcd-peer.pem

etcd.pem
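Before the files are copied to the other masters, it helps to check quickly that nothing is missing and that each leaf certificate chains to `ca.pem`. This is a minimal sketch. It assumes `openssl` is installed and uses the file names listed above. `missing_cert_files` and `verify_leaf_certs` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the cfssl output directory (e.g. ./cert).
# Assumes openssl is installed; helper names are hypothetical.

CERT_FILES="ca.pem ca-key.pem etcd.pem etcd-key.pem etcd-peer.pem etcd-peer-key.pem etcd-client.pem etcd-client-key.pem"

# Print each expected file that is missing; return non-zero if any is missing.
missing_cert_files() {
  local dir=$1 f rc=0
  for f in $CERT_FILES; do   # unquoted on purpose: split the list on spaces
    if [ ! -f "$dir/$f" ]; then
      echo "$f"
      rc=1
    fi
  done
  return $rc
}

# Check that every leaf certificate was signed by ca.pem.
verify_leaf_certs() {
  local dir=$1 leaf
  for leaf in etcd.pem etcd-peer.pem etcd-client.pem; do
    if ! openssl verify -CAfile "$dir/ca.pem" "$dir/$leaf" >/dev/null; then
      echo "verification failed: $leaf" >&2
      return 1
    fi
  done
}

# Run the checks only when a directory is given, e.g. ./check-certs.sh cert
if [ "$#" -gt 0 ]; then
  missing_cert_files "$1" && verify_leaf_certs "$1" && echo "all certificates OK"
fi
```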

Setup on all nodes

Editing /etc/hosts

Edit /etc/hosts on every node so that the nodes can resolve each other by name.

10.16.181.200 k8s-lb k8s-lb

10.16.181.201 k8s-master1 k8s-master1

10.16.181.202 k8s-master2 k8s-master2

10.16.181.203 k8s-master3 k8s-master3

10.16.181.206 k8s-node1 k8s-node1

10.16.181.207 k8s-node2 k8s-node2
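If a script adds these entries on each node, appending only the IPs that are not already present makes the step safe to re-run. This is a sketch that assumes bash and the addresses above. `add_cluster_hosts` and the `HOSTS_FILE` override are illustrative names and are not part of any tool.

```bash
#!/usr/bin/env bash
# Sketch: append the cluster entries to a hosts file, skipping IPs already present.
# HOSTS_FILE defaults to /etc/hosts; add_cluster_hosts is a hypothetical helper.
HOSTS_FILE=${HOSTS_FILE:-/etc/hosts}

CLUSTER_HOSTS="10.16.181.200 k8s-lb
10.16.181.201 k8s-master1
10.16.181.202 k8s-master2
10.16.181.203 k8s-master3
10.16.181.206 k8s-node1
10.16.181.207 k8s-node2"

add_cluster_hosts() {
  local file=$1 ip name
  while read -r ip name; do
    # Exact match on the first field, so 10.16.181.20 never matches 10.16.181.201.
    if ! awk -v ip="$ip" '$1 == ip {found=1} END {exit !found}' "$file"; then
      printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
  done <<< "$CLUSTER_HOSTS"
}

# Usage: ./add-hosts.sh --apply
if [ "$#" -gt 0 ] && [ "$1" = "--apply" ]; then
  add_cluster_hosts "$HOSTS_FILE"
fi
```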

Opening firewall ports

Open the required ports on each node.

Workers do not need the etcd, kube-scheduler, or kube-controller-manager ports.

The LB needs only port 6443.

firewall-cmd --permanent --add-port=179/tcp # calico BGP port

firewall-cmd --permanent --add-port=6443/tcp # k8s API server

firewall-cmd --permanent --add-port=2379/tcp # etcd client API

firewall-cmd --permanent --add-port=2380/tcp # etcd peer API

firewall-cmd --permanent --add-port=10250/tcp # kubelet API

firewall-cmd --permanent --add-port=10251/tcp # kube-scheduler

firewall-cmd --permanent --add-port=10252/tcp # kube-controller-manager

firewall-cmd --permanent --add-port=10255/tcp # kubelet read-only API

firewall-cmd --reload
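The per-role rule above can also be written as one script that works on masters, workers, and the LB. This sketch makes two assumptions. First, the role names `master`, `worker`, and `lb` are labels invented for this example. Second, the worker list drops only the etcd, kube-scheduler, and kube-controller-manager ports, as described above.

```bash
#!/usr/bin/env bash
# Sketch: open the ports from this guide according to a node's role.
# Role names (master/worker/lb) are hypothetical labels for this example.

ports_for_role() {
  case "$1" in
    master) echo "179 6443 2379 2380 10250 10251 10252 10255" ;;
    # Workers skip the etcd, kube-scheduler and kube-controller-manager ports.
    worker) echo "179 6443 10250 10255" ;;
    # The load balancer only forwards the API server port.
    lb)     echo "6443" ;;
    *)      echo "unknown role: $1" >&2; return 1 ;;
  esac
}

open_ports() {
  local ports p
  ports=$(ports_for_role "$1") || return 1
  for p in $ports; do
    firewall-cmd --permanent --add-port="${p}/tcp"
  done
  firewall-cmd --reload
}

# Usage: ./open-ports.sh master
if [ "$#" -gt 0 ]; then
  open_ports "$1"
fi
```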

NTP configuration

In this environment an NTP server runs on 10.127.1.131, so the configuration is as follows.

yum install -y ntp

cat <<EOF > /etc/ntp.conf

driftfile /var/lib/ntp/drift

restrict default nomodify notrap nopeer noquery

restrict 127.0.0.1

restrict ::1

server 10.127.1.131 iburst

includefile /etc/ntp/crypto/pw

keys /etc/ntp/keys

disable monitor

EOF

systemctl enable ntpd

systemctl start ntpd

Timezone

Set the timezone to Asia/Tokyo.

timedatectl set-timezone Asia/Tokyo

Disabling SELinux

sed -i "s/^SELINUX=.*/SELINUX=disabled/" /etc/selinux/config

setenforce 0

Setting up etcd on the masters

Download etcd v3.2.19 and install it in a directory on the PATH.

The certificates are also needed, so copy the files created on k8s-master1 to k8s-master2 and k8s-master3 beforehand.

cd /tmp/

curl -L -O https://github.com/coreos/etcd/releases/download/v3.2.19/etcd-v3.2.19-linux-amd64.tar.gz

tar xzf etcd-v3.2.19-linux-amd64.tar.gz

cp /tmp/etcd-v3.2.19-linux-amd64/etcd /usr/bin/

cp /tmp/etcd-v3.2.19-linux-amd64/etcdctl /usr/bin/

useradd -r -s /sbin/nologin etcd

chmod +x /usr/bin/etcd

chmod +x /usr/bin/etcdctl

mkdir -p /var/lib/etcd/

mkdir -p /etc/etcd/ssl

chown etcd:etcd /var/lib/etcd/

chown root:etcd -R /etc/etcd/ssl

Copy the certificates into /etc/etcd/ssl.

cp ca.pem etcd-client-key.pem etcd-client.pem etcd-key.pem etcd-peer-key.pem etcd-peer.pem etcd.pem /etc/etcd/ssl/

chmod 644 /etc/etcd/ssl/*.pem

Define the etcd service. The unit shown here is the one for k8s-master1.

On the other masters, change --name and the IP addresses accordingly.

cat <<EOF > /etc/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target

[Service]
Type=simple
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=-/etc/etcd/etcd.conf
EnvironmentFile=-/etc/default/etcd
User=etcd
ExecStart=/usr/bin/etcd \
  --name k8s-master1 \
  --data-dir /var/lib/etcd/ \
  --listen-client-urls https://10.16.181.201:2379,https://127.0.0.1:2379 \
  --advertise-client-urls https://10.16.181.201:2379 \
  --listen-peer-urls https://10.16.181.201:2380 \
  --initial-advertise-peer-urls https://10.16.181.201:2380 \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --client-cert-auth \
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd-peer.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-peer-key.pem \
  --peer-client-cert-auth \
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --initial-cluster k8s-master1=https://10.16.181.201:2380,k8s-master2=https://10.16.181.202:2380,k8s-master3=https://10.16.181.203:2380 \
  --initial-cluster-state=new
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
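Instead of hand-editing the unit on k8s-master2 and k8s-master3, you can generate it from a node name and IP. This sketch assumes the same paths and flags as the k8s-master1 unit. `render_etcd_unit` is a hypothetical helper. The heredoc delimiter is unquoted, so the shell joins the `\`-continued lines and `ExecStart` is written as a single line.

```bash
#!/usr/bin/env bash
# Sketch: print the etcd unit for any master (paths/flags mirror the k8s-master1 unit).
# render_etcd_unit is a hypothetical helper name.

INITIAL_CLUSTER="k8s-master1=https://10.16.181.201:2380,k8s-master2=https://10.16.181.202:2380,k8s-master3=https://10.16.181.203:2380"

render_etcd_unit() {
  local name=$1 ip=$2
  # Unquoted EOF: variables expand and backslash-newline pairs are joined.
  cat <<EOF
[Unit]
Description=Etcd Server
After=network.target

[Service]
Type=simple
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=-/etc/etcd/etcd.conf
EnvironmentFile=-/etc/default/etcd
User=etcd
ExecStart=/usr/bin/etcd \
--name ${name} \
--data-dir /var/lib/etcd/ \
--listen-client-urls https://${ip}:2379,https://127.0.0.1:2379 \
--advertise-client-urls https://${ip}:2379 \
--listen-peer-urls https://${ip}:2380 \
--initial-advertise-peer-urls https://${ip}:2380 \
--cert-file=/etc/etcd/ssl/etcd.pem \
--key-file=/etc/etcd/ssl/etcd-key.pem \
--client-cert-auth \
--trusted-ca-file=/etc/etcd/ssl/ca.pem \
--peer-cert-file=/etc/etcd/ssl/etcd-peer.pem \
--peer-key-file=/etc/etcd/ssl/etcd-peer-key.pem \
--peer-client-cert-auth \
--peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
--initial-cluster ${INITIAL_CLUSTER} \
--initial-cluster-state=new
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}

# Usage: ./render-etcd-unit.sh k8s-master2 10.16.181.202 > /etc/systemd/system/etcd.service
if [ "$#" -eq 2 ]; then
  render_etcd_unit "$1" "$2"
fi
```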

Start the etcd service.

systemctl daemon-reload

systemctl enable etcd.service

systemctl start etcd.service

Check that etcd has started.

[root@k8s-master1 ~]# etcdctl --cert-file=/etc/etcd/ssl/etcd-peer.pem --ca-file=/etc/etcd/ssl/ca.pem --key-file=/etc/etcd/ssl/etcd-peer-key.pem --endpoints=https://10.16.181.201:2379,https://10.16.181.202:2379,https://10.16.181.203:2379 member list

422daac13e841503: name=k8s-master1 peerURLs=https://10.16.181.201:2380 clientURLs=https://10.16.181.201:2379 isLeader=false

af544521443fe1af: name=k8s-master3 peerURLs=https://10.16.181.203:2380 clientURLs=https://10.16.181.203:2379 isLeader=true

feb847e845fa5680: name=k8s-master2 peerURLs=https://10.16.181.202:2380 clientURLs=https://10.16.181.202:2379 isLeader=false
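`member list` shows which members exist, but not whether each one is answering. This is a sketch of a health check with the etcd v3 API. It assumes the certificate paths above and uses the client certificate. `etcd_endpoints` and `etcd_health` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: build the client endpoint list and query member health via the v3 API.
# Certificate paths follow this guide; helper names are hypothetical.

MASTER_IPS="10.16.181.201 10.16.181.202 10.16.181.203"

# Join IPs into "https://IP:2379,https://IP:2379,..."
etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    out="${out:+$out,}https://${ip}:2379"
  done
  echo "$out"
}

etcd_health() {
  # $MASTER_IPS is left unquoted so each IP becomes a separate argument.
  ETCDCTL_API=3 etcdctl \
    --cacert=/etc/etcd/ssl/ca.pem \
    --cert=/etc/etcd/ssl/etcd-client.pem \
    --key=/etc/etcd/ssl/etcd-client-key.pem \
    --endpoints="$(etcd_endpoints $MASTER_IPS)" \
    endpoint health
}

# Usage: ./etcd-health.sh --check
if [ "$#" -gt 0 ] && [ "$1" = "--check" ]; then
  etcd_health
fi
```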

Installing Kubernetes on the masters and nodes

Installing Docker

Install Docker following the Docker CE for CentOS guide (see References).

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum install docker-ce

systemctl start docker

systemctl enable docker

Installing kubeadm

Install kubeadm following the kubeadm installation guide (see References).

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet kubeadm kubectl

sed -i "s/cgroup-driver=systemd/cgroup-driver=cgroupfs/g" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

systemctl daemon-reload

systemctl enable kubelet

systemctl start kubelet
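The `sed` above switches kubelet to `cgroupfs` because kubelet and Docker must use the same cgroup driver, and Docker CE normally defaults to `cgroupfs`. The sketch below compares the two. It assumes the drop-in path used above and that `docker info` prints a `Cgroup Driver:` line. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: confirm Docker and kubelet agree on the cgroup driver.
# Assumes the kubeadm drop-in path used in this guide; override via KUBELET_DROPIN.
KUBELET_DROPIN=${KUBELET_DROPIN:-/etc/systemd/system/kubelet.service.d/10-kubeadm.conf}

# Extract the driver name from "docker info" text on stdin.
docker_driver_from_info() {
  awk -F': *' '/Cgroup Driver/ {print $2; exit}'
}

# Extract the --cgroup-driver value from a kubelet drop-in file.
kubelet_driver_from_dropin() {
  grep -o 'cgroup-driver=[a-z]*' "$1" | head -n1 | cut -d= -f2
}

check_cgroup_drivers() {
  local d k
  d=$(docker info 2>/dev/null | docker_driver_from_info)
  k=$(kubelet_driver_from_dropin "$KUBELET_DROPIN")
  if [ -n "$d" ] && [ "$d" = "$k" ]; then
    echo "OK: both use $d"
  else
    echo "MISMATCH: docker=$d kubelet=$k" >&2
    return 1
  fi
}

# Usage: ./check-cgroup.sh --check
if [ "$#" -gt 0 ] && [ "$1" = "--check" ]; then
  check_cgroup_drivers
fi
```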

Setting up the masters with kubeadm

Create the kubeadm configuration file.

cat <<EOF > /tmp/kubeconfig
apiVersion: kubeadm.k8s.io/v1alpha1
kind: MasterConfiguration
apiServerCertSANs:
- "10.16.181.200"
- "10.16.181.201"
- "10.16.181.202"
- "10.16.181.203"
- "k8s-lb"
- "k8s-master1"
- "k8s-master2"
- "k8s-master3"
- "k8s-node1"
- "k8s-node2"
etcd:
  endpoints:
  - "https://10.16.181.201:2379"
  - "https://10.16.181.202:2379"
  - "https://10.16.181.203:2379"
  caFile: "/etc/etcd/ssl/ca.pem"
  certFile: "/etc/etcd/ssl/etcd-client.pem"
  keyFile: "/etc/etcd/ssl/etcd-client-key.pem"
networking:
  podSubnet: 192.168.0.0/16
kubernetesVersion: v1.10.2
featureGates:
  CoreDNS: true
apiServerExtraArgs:
  apiserver-count: "3"
EOF

On k8s-master1, first run kubeadm init --config=/tmp/kubeconfig.

kubeadm init --config=/tmp/kubeconfig

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

On k8s-master2 and k8s-master3, create the certificate directory.

mkdir /etc/kubernetes/pki/

From k8s-master1, copy the generated certificates and the kubeadm configuration to k8s-master2 and k8s-master3.

scp -r /etc/kubernetes/pki/* k8s-master2:/etc/kubernetes/pki/

scp -r /etc/kubernetes/pki/* k8s-master3:/etc/kubernetes/pki/

scp /tmp/kubeconfig k8s-master2:/tmp/

scp /tmp/kubeconfig k8s-master3:/tmp

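On k8s-master2 and k8s-master3, run kubeadm init --config=/tmp/kubeconfig in the same way. First delete the copied API server certificate and key so that kubeadm generates a new pair for that host.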

rm /etc/kubernetes/pki/apiserver.*

kubeadm init --config=/tmp/kubeconfig

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Setting up the nodes with kubeadm

Add the nodes to the cluster using the join command printed by kubeadm init --config=/tmp/kubeconfig on k8s-master1, which looks like the following.

Log in to k8s-node1 and k8s-node2 and run:

kubeadm join 10.16.181.201:6443 --token rm3dts.ega79cp37moyp9te --discovery-token-ca-cert-hash sha256:162d6442dca5b002ffb672524ccba1d4f0575d1f68b32adfee06852c6e49af5b
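If the `kubeadm init` output is no longer at hand, you can recompute the `--discovery-token-ca-cert-hash` value on a master from the cluster CA. The sketch below wraps the `openssl` pipeline from the kubeadm documentation in a hypothetical `ca_cert_hash` function. It assumes an RSA CA at `/etc/kubernetes/pki/ca.crt`.

```bash
#!/usr/bin/env bash
# Sketch: recompute the discovery hash (SHA-256 of the CA's DER public key).
# Assumes an RSA CA; ca_cert_hash is a hypothetical helper name.

ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'    # keep only the hex digest after "...= "
}

# Usage: ./ca-hash.sh /etc/kubernetes/pki/ca.crt
if [ "$#" -gt 0 ]; then
  echo "sha256:$(ca_cert_hash "$1")"
fi
```

Bootstrap tokens expire (after 24 hours by default). For a later join, you can issue a new token on a master with `kubeadm token create`.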

Once these steps are complete, kubectl get node lists all the nodes.

[root@k8s-master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady master 1h v1.10.2

k8s-master2 NotReady master 1h v1.10.2

k8s-master3 NotReady master 58m v1.10.2

k8s-node1 NotReady <none> 34m v1.10.2

k8s-node2 NotReady <none> 35m v1.10.2

No CNI plugin has been installed yet, so every node remains NotReady.

Setting up the LB

Install HAProxy on k8s-lb.

yum install -y haproxy

mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.org

cat <<EOF > /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2 info

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 256

user haproxy

group haproxy

daemon

defaults

mode tcp

log global

option tcplog

timeout connect 10s

timeout client 30s

timeout server 30s

frontend http-in

bind *:80

mode http

stats enable

stats auth admin:adminpassword

stats hide-version

stats show-node

stats refresh 60s

stats uri /haproxy?stats

frontend k8s

bind *:6443

mode tcp

default_backend k8s_backend

backend k8s_backend

balance roundrobin

server k8s-master1 10.16.181.201:6443 check

server k8s-master2 10.16.181.202:6443 check

server k8s-master3 10.16.181.203:6443 check

EOF

systemctl start haproxy

systemctl enable haproxy

systemctl status haproxy
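The backend list grows with each master, so it can be convenient to generate it. This sketch assumes the `name=ip` argument format shown in the comments. `render_backend` is hypothetical. `haproxy -c -f` is HAProxy's own configuration check.

```bash
#!/usr/bin/env bash
# Sketch: generate the k8s_backend section from name=ip pairs.
# render_backend is a hypothetical helper name.

render_backend() {
  local pair
  echo "backend k8s_backend"
  echo "    balance roundrobin"
  for pair in "$@"; do
    # Each argument looks like k8s-master1=10.16.181.201
    echo "    server ${pair%%=*} ${pair#*=}:6443 check"
  done
}

# After editing haproxy.cfg, validate it before restarting:
#   haproxy -c -f /etc/haproxy/haproxy.cfg && systemctl restart haproxy
if [ "$#" -gt 0 ]; then
  render_backend "$@"
fi
```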

Next, update the kube-proxy configuration so that it goes through the LB.

kubectl -n kube-system edit configmap kube-proxy

Change the server entry below to the LB's IP address.

apiVersion: v1
data:
  <snip>
  kubeconfig.conf: |-
    apiVersion: v1
    kind: Config
    clusters:
    - cluster:
        certificate-authority: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        server: https://10.16.181.200:6443
      name: default
    contexts:
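You can also make the same change non-interactively. This sketch assumes the LB address above. It also assumes that kubeadm's kube-proxy pods carry the `k8s-app=kube-proxy` label, which you can confirm with `kubectl -n kube-system get pod --show-labels`. `point_to_lb` is a hypothetical helper. Running kube-proxy pods are not expected to reload the file, so the sketch deletes them and lets the DaemonSet recreate them.

```bash
#!/usr/bin/env bash
# Sketch: point kube-proxy's kubeconfig at the LB without an editor.
# LB_IP and the k8s-app=kube-proxy label are assumptions; verify them first.
LB_IP=${LB_IP:-10.16.181.200}

# Rewrite any "server: https://<host>:6443" line to use the given address.
point_to_lb() {
  sed -E "s#(server: https://)[^:/]+(:6443)#\1${1}\2#"
}

# Usage: ./kube-proxy-to-lb.sh --apply
if [ "$#" -gt 0 ] && [ "$1" = "--apply" ]; then
  kubectl -n kube-system get configmap kube-proxy -o yaml \
    | point_to_lb "$LB_IP" \
    | kubectl replace -f -
  # Recreate the pods so they start with the updated configuration.
  kubectl -n kube-system delete pod -l k8s-app=kube-proxy
fi
```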

Installing the CNI plugin

Calico is used here, following the Calico installation guide (see References).

kubectl apply -f https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/rbac.yaml

curl https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/calico.yaml -O

sed -i "s/^\(.*etcd_endpoints: \).*/\1 \"https:\/\/10.16.181.201:2379,https:\/\/10.16.181.202:2379,https:\/\/10.16.181.203:2379\"/g" calico.yaml

sed -i "s/^\(.*etcd_ca:\).*/\1 \"\/calico-secrets\/etcd-ca\"/" calico.yaml

sed -i "s/^\(.*etcd_cert:\).*/\1 \"\/calico-secrets\/etcd-cert\"/" calico.yaml

sed -i "s/^\(.*etcd_key:\).*/\1 \"\/calico-secrets\/etcd-key\"/" calico.yaml

sed -i "s| # etcd-cert: null| etcd-cert: $(base64 < /etc/etcd/ssl/etcd-client.pem | tr -d '\n')|" calico.yaml

sed -i "s| # etcd-key: null| etcd-key: $(base64 < /etc/etcd/ssl/etcd-client-key.pem | tr -d '\n')|" calico.yaml

sed -i "s| # etcd-ca: null| etcd-ca: $(base64 < /etc/etcd/ssl/ca.pem | tr -d '\n')|" calico.yaml
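Before running `kubectl apply`, it is worth confirming that each embedded secret decodes back to its original file. Otherwise a mangled value would surface only when the Calico pods try to reach etcd. This sketch assumes the `key: <base64>` line format that the substitutions above produce. `check_calico_secret` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: verify that a base64 value embedded in calico.yaml matches its source file.
# check_calico_secret is a hypothetical helper name.

check_calico_secret() {
  local key=$1 file=$2 manifest=$3 value
  # First line whose first field is exactly "<key>:" (commented lines start with "#").
  value=$(awk -v k="${key}:" '$1 == k {print $2; exit}' "$manifest")
  if [ -z "$value" ]; then
    echo "$key: not embedded" >&2
    return 1
  fi
  if [ "$(printf '%s' "$value" | base64 -d)" = "$(cat "$file")" ]; then
    echo "$key: OK"
  else
    echo "$key: does not match $file" >&2
    return 1
  fi
}

# Usage: ./check-calico-secrets.sh --check
if [ "$#" -gt 0 ] && [ "$1" = "--check" ]; then
  check_calico_secret etcd-cert /etc/etcd/ssl/etcd-client.pem calico.yaml &&
  check_calico_secret etcd-key /etc/etcd/ssl/etcd-client-key.pem calico.yaml &&
  check_calico_secret etcd-ca /etc/etcd/ssl/ca.pem calico.yaml
fi
```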

kubectl apply -f calico.yaml

Verification

If every pod shows Running in the output of the following command, the cluster is complete.

[root@k8s-master1 ~]# kubectl get pod -o wide --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

kube-system calico-kube-controllers-5b85d756c6-7sg22 1/1 Running 0 2h 10.16.181.207 k8s-node2

kube-system calico-node-8jwnr 2/2 Running 0 2h 10.16.181.201 k8s-master1

kube-system calico-node-rkn2j 2/2 Running 0 2h 10.16.181.202 k8s-master2

kube-system calico-node-wbgbd 2/2 Running 0 2h 10.16.181.203 k8s-master3

kube-system calico-node-z82tr 2/2 Running 0 2h 10.16.181.206 k8s-node1

kube-system calico-node-zwjj4 2/2 Running 0 2h 10.16.181.207 k8s-node2

kube-system coredns-7997f8864c-bpxdn 1/1 Running 0 3h 192.168.178.1 k8s-node1

kube-system coredns-7997f8864c-lp6hf 1/1 Running 0 3h 192.168.105.65 k8s-node2

kube-system kube-apiserver-k8s-master1.test.ytsuboi.local 1/1 Running 0 3h 10.16.181.201 k8s-master1

kube-system kube-apiserver-k8s-master2.test.ytsuboi.local 1/1 Running 0 3h 10.16.181.202 k8s-master2

kube-system kube-apiserver-k8s-master3.test.ytsuboi.local 1/1 Running 0 3h 10.16.181.203 k8s-master3

kube-system kube-controller-manager-k8s-master1.test.ytsuboi.local 1/1 Running 0 3h 10.16.181.201 k8s-master1

kube-system kube-controller-manager-k8s-master2.test.ytsuboi.local 1/1 Running 0 3h 10.16.181.202 k8s-master2

kube-system kube-controller-manager-k8s-master3.test.ytsuboi.local 1/1 Running 0 3h 10.16.181.203 k8s-master3

kube-system kube-proxy-92gkq 1/1 Running 0 3h 10.16.181.202 k8s-master2

kube-system kube-proxy-blq69 1/1 Running 0 3h 10.16.181.203 k8s-master3

kube-system kube-proxy-gpfhv 1/1 Running 0 3h 10.16.181.201 k8s-master1

kube-system kube-proxy-m6467 1/1 Running 0 3h 10.16.181.207 k8s-node2

kube-system kube-proxy-sp62l 1/1 Running 0 3h 10.16.181.206 k8s-node1

kube-system kube-scheduler-k8s-master1.test.ytsuboi.local 1/1 Running 1 3h 10.16.181.201 k8s-master1

kube-system kube-scheduler-k8s-master2.test.ytsuboi.local 1/1 Running 0 3h 10.16.181.202 k8s-master2

kube-system kube-scheduler-k8s-master3.test.ytsuboi.local 1/1 Running 0 3h 10.16.181.203 k8s-master3
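You can script the visual check above by listing any pod whose STATUS is not `Running`. This sketch reads `kubectl get pod --all-namespaces --no-headers` output and assumes STATUS is the fourth column, as in the output above. `not_running_pods` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: report pods whose STATUS is not Running.
# not_running_pods is a hypothetical helper name.

not_running_pods() {
  # Columns: NAMESPACE NAME READY STATUS RESTARTS AGE ...
  awk '$4 != "Running" {print $1 "/" $2 " " $4}'
}

# Usage: ./check-pods.sh --check
if [ "$#" -gt 0 ] && [ "$1" = "--check" ]; then
  bad=$(kubectl get pod --all-namespaces --no-headers | not_running_pods)
  if [ -n "$bad" ]; then
    echo "$bad"
    exit 1
  fi
  echo "all pods Running"
fi
```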

References

- https://kubernetes.io/docs/setup/independent/high-availability/

- https://kubernetes.io/docs/concepts/cluster-administration/certificates/

- https://docs.docker.com/install/linux/docker-ce/centos/#install-using-the-repository

- https://kubernetes.io/docs/setup/independent/install-kubeadm/

- https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/calico